Machine learning approaches for sound separation and vocal segmentation

Our aim is to advance the understanding of cultural learning in songbirds. Over the past years, we have gathered extensive datasets from pair-housed birds engaged in courtship and mating behaviors, and from young birds learning their songs by interacting with their parents. Making sense of these longitudinal recordings requires solving increasingly complex sound-separation and vocal-segmentation problems. For each animal, we need to identify the times at which it vocalizes and reliably distinguish these events from other sounds, including the vocalizations of other animals.
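The segmentation target can be pictured as one list of (onset, offset) intervals per animal. The sketch below converts a per-frame binary vocal mask for each bird into such intervals. The frame rate, bird names and masks are hypothetical and serve only as an illustration.

```python
import numpy as np


def frames_to_segments(mask, frame_rate):
    """Convert a per-frame boolean vocal mask into (onset, offset) times in seconds.

    mask: 1-D array, True where the animal is vocalizing.
    frame_rate: frames per second (a hypothetical analysis setting).
    """
    mask = np.asarray(mask, dtype=bool)
    # Pad with False on both sides so segments touching the edges are closed.
    padded = np.concatenate(([False], mask, [False]))
    # +1 marks the first frame of a segment, -1 the frame just after it ends.
    changes = np.diff(padded.astype(np.int8))
    onsets = np.flatnonzero(changes == 1)
    offsets = np.flatnonzero(changes == -1)
    return [(float(on) / frame_rate, float(off) / frame_rate)
            for on, off in zip(onsets, offsets)]


# Hypothetical per-frame annotations for two birds at 100 frames per second.
annotations = {
    "bird_A": np.array([0, 1, 1, 0, 0, 1, 1, 1, 0, 0], dtype=bool),
    "bird_B": np.array([0, 0, 0, 1, 1, 0, 0, 0, 0, 1], dtype=bool),
}
segments = {bird: frames_to_segments(m, frame_rate=100)
            for bird, m in annotations.items()}
print(segments)
```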

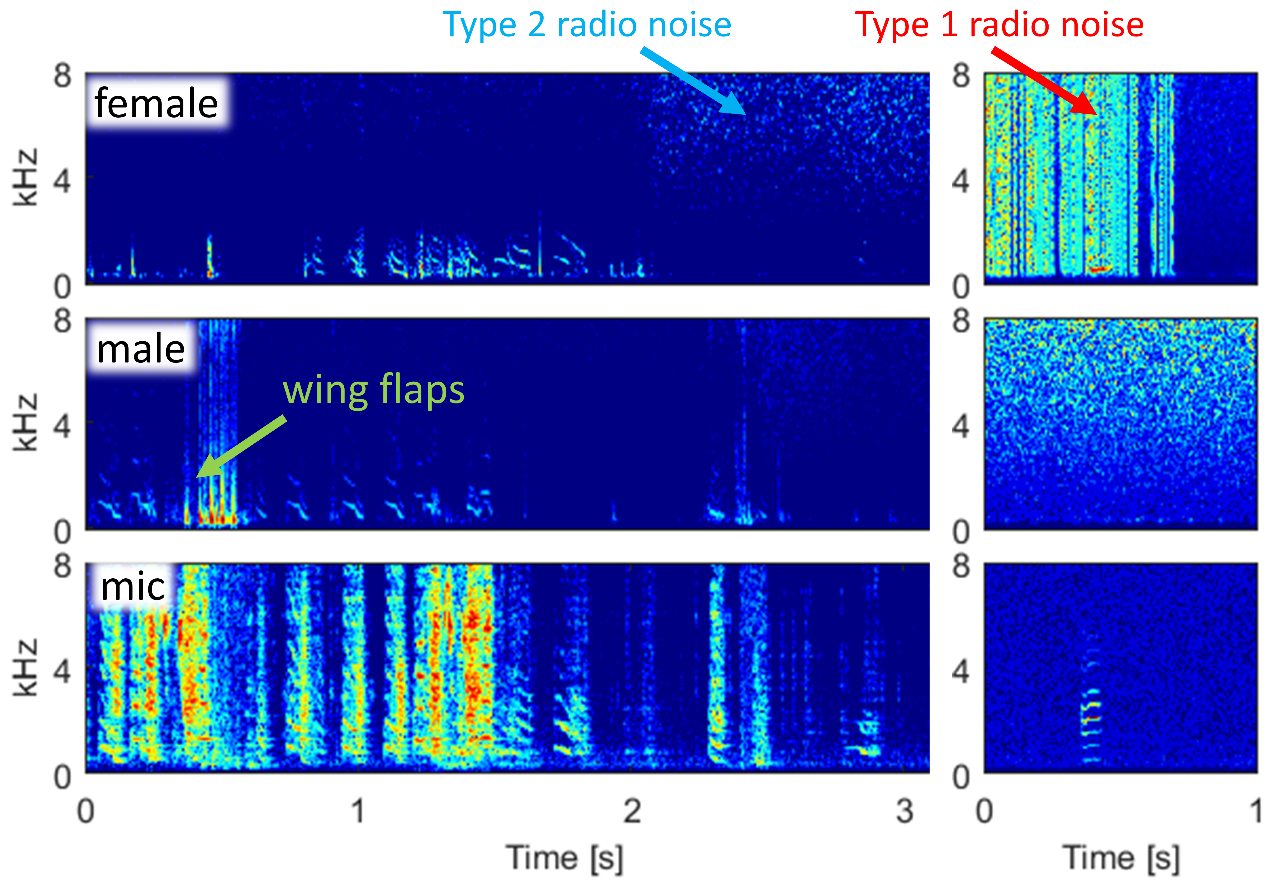

Our birds are housed together in acoustically isolated chambers. Their sounds are recorded with a single wall-mounted microphone, and their movements are observed with high-resolution cameras from three orthogonal viewpoints. Each bird also wears an accelerometer that provides distinct signals of its body movements, including the low-frequency vibrations associated with vocalizations. Because these signals are carried by the animal itself, they are useful for separating the vocal behavior of each individual in a social setting.
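One simple way to use the animal-borne signals is to attribute each vocal segment detected on the shared microphone to the bird whose accelerometer shows the most temporally overlapping activity. The sketch below assumes that segments have already been detected on every channel as (onset, offset) pairs in seconds. The function names, the overlap rule and the example times are hypothetical and do not describe the lab's pipeline.

```python
def overlap(a, b):
    """Length of the temporal overlap between two (onset, offset) intervals."""
    return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))


def attribute_segments(mic_segments, acc_segments, min_overlap=0.0):
    """Assign each microphone segment to the bird with the largest accelerometer overlap.

    mic_segments: list of (onset, offset) from the shared microphone.
    acc_segments: dict mapping bird id -> list of (onset, offset) from its accelerometer.
    Returns a list of (segment, bird id or None if no overlap exceeds min_overlap).
    """
    result = []
    for seg in mic_segments:
        best_bird, best_overlap = None, min_overlap
        for bird, segs in acc_segments.items():
            total = sum(overlap(seg, s) for s in segs)
            if total > best_overlap:
                best_bird, best_overlap = bird, total
        result.append((seg, best_bird))
    return result


# Hypothetical detections (seconds).
mic = [(0.0, 0.5), (1.0, 1.4), (2.0, 2.2)]
acc = {"bird_A": [(0.05, 0.45)], "bird_B": [(1.1, 1.3)]}
attributed = attribute_segments(mic, acc)
print(attributed)
```

Microphone segments without any accelerometer overlap stay unattributed (`None`), which is where sounds from non-vocal or unmonitored sources would show up. A limitation of this rule is that simultaneous calls from two birds would be assigned to only one of them.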

Accelerometer signals are harder to analyze than microphone signals for three reasons: they capture only low-frequency vibrations, they are affected by radio noise, and wing flaps can mask vocal signals.
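The following naive detector shows why annotating a single accelerometer channel is fragile. It flags frames whose energy in a frequency band exceeds a multiple of the median band energy, a crude noise floor. The sampling rate, band edges, frame sizes, threshold and synthetic signal are all hypothetical choices, not values taken from the recordings.

```python
import numpy as np


def band_energy(signal, fs, frame_len, hop, f_lo, f_hi):
    """Per-frame energy of `signal` between f_lo and f_hi (Hz), via a Hann-windowed FFT."""
    window = np.hanning(frame_len)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)
    n_frames = 1 + (len(signal) - frame_len) // hop
    energy = np.empty(n_frames)
    for i in range(n_frames):
        frame = signal[i * hop: i * hop + frame_len] * window
        spectrum = np.abs(np.fft.rfft(frame)) ** 2
        energy[i] = spectrum[band].sum()
    return energy


def detect_frames(energy, threshold_factor=5.0):
    """Flag frames whose band energy exceeds a multiple of the median band energy."""
    return energy > threshold_factor * np.median(energy)


# Hypothetical signal: 1 s of weak noise at 8 kHz with a 600 Hz burst from 0.4 s to 0.6 s.
fs = 8000
t = np.arange(fs) / fs
rng = np.random.default_rng(0)
acc = 0.01 * rng.standard_normal(fs)
burst = (t >= 0.4) & (t < 0.6)
acc[burst] += np.sin(2 * np.pi * 600 * t[burst])

energy = band_energy(acc, fs, frame_len=256, hop=128, f_lo=300, f_hi=1500)
vocal_frames = detect_frames(energy)
print(np.flatnonzero(vocal_frames))
```

The detector would flag any non-vocal event with energy in the same band, such as a wing flap, just as readily. A shifting radio-noise floor would also move the threshold. These are the kinds of errors that information from other channels can help correct.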

We have started to develop a semi-supervised machine learning system that can annotate a single channel using minimal expert-labelled data. Because various sources of noise in a single channel cause annotation errors, we want to take advantage of the information contained in the other channels. Our current approach is to extend the input and output space of our machine learning system and to learn the annotations of all channels simultaneously.
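The idea of learning all channel annotations jointly can be sketched as a model whose input concatenates per-frame features from every channel and whose output gives one vocal/non-vocal probability per channel. This is an illustrative stand-in, not the system described above. It uses multi-output logistic regression combined with plain self-training (pseudo-labelling confidently predicted unlabelled frames) as a simple semi-supervised method. The toy data (one energy feature per channel), channel names and hyperparameters are all assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_joint(X, Y, n_iter=2000, lr=0.5, l2=1e-3):
    """Fit one logistic output per channel on shared multi-channel input features.

    X: (n_frames, n_features) per-frame features concatenated across all channels.
    Y: (n_frames, n_channels) binary labels, one column per annotated channel.
    """
    n, d = X.shape
    W = np.zeros((d, Y.shape[1]))
    bias = np.zeros(Y.shape[1])
    for _ in range(n_iter):
        P = sigmoid(X @ W + bias)   # predicted probabilities for all channels at once
        G = (P - Y) / n             # gradient of mean binary cross-entropy w.r.t. logits
        W -= lr * (X.T @ G + l2 * W)
        bias -= lr * G.sum(axis=0)
    return W, bias


def self_train(X_lab, Y_lab, X_unlab, n_rounds=3, confidence=0.95):
    """Self-training: retrain with confidently predicted unlabelled frames as pseudo-labels."""
    W, bias = train_joint(X_lab, Y_lab)
    for _ in range(n_rounds):
        P = sigmoid(X_unlab @ W + bias)
        # Keep only frames where the prediction for every channel is confident.
        confident = np.all((P > confidence) | (P < 1 - confidence), axis=1)
        X_aug = np.vstack([X_lab, X_unlab[confident]])
        Y_aug = np.vstack([Y_lab, (P[confident] > 0.5).astype(float)])
        W, bias = train_joint(X_aug, Y_aug)
    return W, bias


def make_synthetic(n, rng, p=0.3, noise=0.2):
    """Hypothetical toy data: birds A and B vocalize independently with probability p."""
    a = (rng.random(n) < p).astype(float)
    b = (rng.random(n) < p).astype(float)
    X = np.column_stack([
        a + b + noise * rng.standard_normal(n),  # shared microphone hears both birds
        a + noise * rng.standard_normal(n),      # bird A's accelerometer
        b + noise * rng.standard_normal(n),      # bird B's accelerometer
    ])
    Y = np.column_stack([np.maximum(a, b), a, b])  # labels: mic, acc_A, acc_B
    return X, Y


rng = np.random.default_rng(0)
X_lab, Y_lab = make_synthetic(40, rng)       # small expert-labelled set
X_unlab, _ = make_synthetic(500, rng)        # unlabelled frames
X_test, Y_test = make_synthetic(500, rng)
W, bias = self_train(X_lab, Y_lab, X_unlab)
pred = sigmoid(X_test @ W + bias) > 0.5
accuracy = (pred == Y_test.astype(bool)).mean(axis=0)
print("per-channel accuracy (mic, acc_A, acc_B):", accuracy)
```

Every output sees all input channels, so the model can, for example, draw on bird A's accelerometer when deciding whether the shared microphone contains a vocalization. A practical system would likely replace the linear model with a richer sequence model.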

Student Project

If you are interested in this project for an MSc Thesis or Semester Project, please get in touch with Kanghwi Lee.
