Scientific Argument Mining

Retrieving Arguments from Scientific Papers as an expanded Queries Task

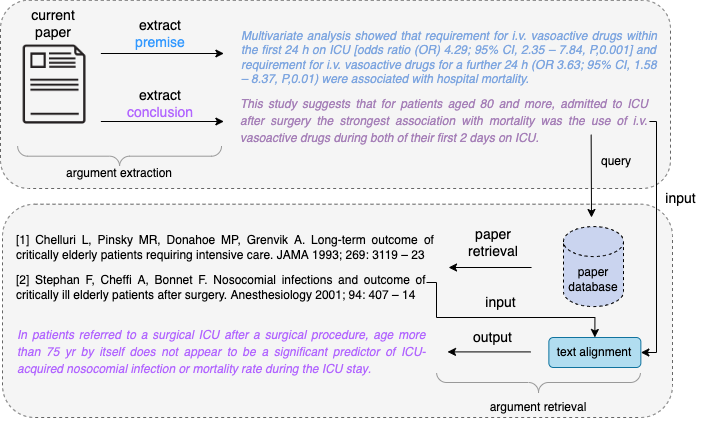

In scientific research, scholars write papers in which they make arguments. Usually, the main argument of a paper is made in the abstract, where the conclusion from the study is drawn and where the main premises are stated in support of the conclusion. Further supporting arguments of the main conclusion can usually be found in the main text. However, often, some relevant arguments or even just their premises are not explicitly stated as may be customary in respective field of research, which can create the need for an inexperienced reader to search more information about such implicit premises. How can we tailor literature search to specifically support the discovery of arguments and of their premises?

Although using keywords as query is precise and convenient, retrieval with only keywords often reaches low recall (1,2,3). First there is the question of how to recognize an argument and its constituent parts, the conclusion and its premises, from a given text? We argue that argument retrieval in scientific papers is an essential problem that deserves attention.

Most existing literature retrieval systems are keyword-only search engines (4,5,6). We are interested in developing end-to-end argument retrieval systems on large data sets of scientific papers. For example, such a system should take the main conclusion as input and produce in the output the most relevant externally retrieved premises and interim arguments.

The pipeline begins with extracting argumentative text from scientific papers. We will explore diverse NLP-driven approaches (both neural networks that operate with carefully learned embeddings (7,8) and unsupervised models that work on argumentative features (9,10)) to identify argument components in scientific papers. From the extracted arguments, we will use computational text alignment techniques to find the most relevant arguments in external papers. Finally, keyword-based queries expanded with multiple contexts will be used to retrieve the most relevant external arguments.

As a starting point of the data to be used, we will work with the Semantic Scholar Open Research Corpus (S2ORC (11)), which is a collection of over 136 M English language academic papers. In addition, nearly 12 M papers in the S2ORC dataset have well-curated metadata, abstract, and bibliography available, among which about 8 M papers have full-body text.

Student Project

If you are interested in this project for an MSc Thesis or Semester Project, please get in touch with Yingqiang Gao

References

1. Hinrich Schuetze, Christopher D Manning, and Prabhakar Raghavan. Introduction to infor- mation retrieval, volume 39. Cambridge University Press Cambridge, 2008.

2. James Allan. Hard track overview in trec 2003 high accuracy retrieval from documents. Technical report, MASSACHUSETTS UNIV AMHERST CENTER FOR INTELLIGENT INFORMATION RETRIEVAL, 2005.

3. Aris Anagnostopoulos, Andrei Broder, and Kunal Punera. Effective and efficient classi- fication on a search-engine model. Knowledge and information systems, 16(2):129–154, 2008.

4. Joshua M Nicholson, Milo Mordaunt, Patrice Lopez, Ashish Uppala, Domenic Rosati, Neves P Rodrigues, Peter Grabitz, and Sean Rife. scite: a smart citation index that displays the context of citations and classifies their intent using deep learning. bioRxiv, 2021.

5. K Mueen Ahmed and Bandar Al Dhubaib. Zotero: A bibliographic assistant to researcher. Journal of Pharmacology and Pharmacotherapeutics, 2(4):303, 2011.

6. Accessing RefWorks. Refworks. 2009.

7. Iz Beltagy, Kyle Lo, and Arman Cohan. Scibert: A pretrained language model for scientific text. arXiv preprint arXiv:1903.10676, 2019.

8. Iz Beltagy, Matthew E Peters, and Arman Cohan. Longformer: The long-document trans- former. arXiv preprint arXiv:2004.05150, 2020.

9. John Lawrence and Chris Reed. Combining argument mining techniques. In Proceedings of the 2nd Workshop on Argumentation Mining, pages 127–136, 2015.

10. Simone Teufel, Advaith Siddharthan, and Colin Batchelor. Towards domain-independent argumentative zoning: Evidence from chemistry and computational linguistics. In Pro- ceedings of the 2009 conference on empirical methods in natural language processing, pages 1493–1502, 2009.

11. Kyle Lo, Lucy Lu Wang, Mark Neumann, Rodney Kinney, and Dan S Weld. S2orc: The semantic scholar open research corpus. arXiv preprint arXiv:1911.02782, 2019.